Some startups don’t die because their product sucks. They die from infrastructure decisions they made six months ago when they had three users and a dream.

Your MVP is one of those decisions. And what I learned the hard way is that the infrastructure that gets you your first ten clients will actively prevent you from getting your next hundred.

This has nothing to do with building the “perfect” product. It’s about recognizing when your foundation stops enabling growth and starts choking it.

Let me show you how to spot this before it costs you six months.

Why your MVP actually matters

You’re a founder. You need to move fast, test ideas and get to market before you run out of money.

That’s why MVPs exist. Build the minimum thing that proves the concept, then iterate.

But the truth is MVPs are designed to break. They’re supposed to be temporary. The danger is when they become permanent.

Think about it this way. Your product is a house, features are the rooms, and your MVP is the foundation.

Add all the rooms you want, but if the foundation can’t support the weight, the whole thing comes down.

When we started Ken in February 2023, I built the first version in two weeks. I learned to code with AI, threw together scripts and stored everything in CSV files.

Someone on my team had to physically type shell commands every morning to run campaigns. It was ugly. But it worked.

A couple of months later, we were approaching $1M in revenue, but the same scrappy MVP that helped us hit that number was killing us.

Our database couldn’t process data anymore, campaigns were delayed and my team was waking up at 2 AM to manually run programs just to keep things working.

We duplicated our entire system across 10 different browser tabs and ran it like that for two months just to deliver what clients paid for.

That’s when you know your MVP has become a liability. When it’s actively preventing you from doing your job.

The three stages most startups go through

Most startups follow the same pattern with their MVP. The only question is whether you recognize which stage you’re in before it’s too late.

Stage 1: Whatever works

You need to prove the concept fast. Elegant architecture doesn’t matter yet. You just need something functional enough to test if anyone actually wants this thing.

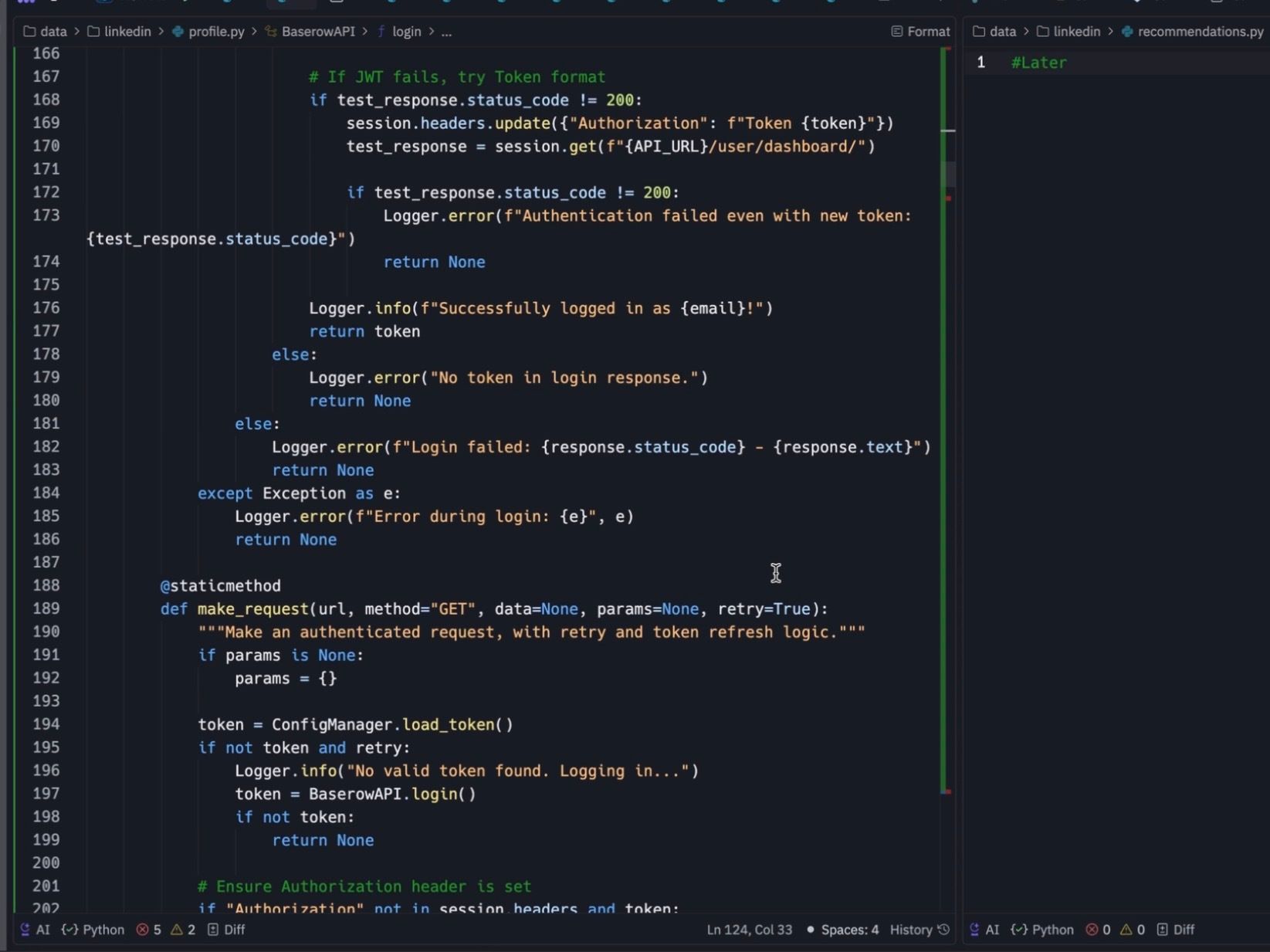

Ken started with CSV files and JSON. I skipped the cloud database and proper architecture. The code could send personalized emails and that’s all that mattered.

This is actually smart, because at this stage you don’t know if anyone wants what you’re building.

Spending three months architecting the perfect system makes no sense if you’re pivoting next week.

Use the simplest thing that lets you test your idea and duct tape it together. The scrappiest solution that proves the concept is the right choice.

Stage 2: Good enough

You’ve got customers now. They’re paying. You need something more reliable than scripts and manual processes.

But you can’t spend months rebuilding infrastructure either. This is where most startups patch their MVP.

Maybe you move to a better database. Maybe you hire someone to clean up the code. Maybe you build some automation so your team isn’t running things manually.

For Ken, we moved our database from CSV files to Baserow in November 2023. It handled everything we needed for six months. We onboarded clients, ran campaigns and scaled revenue.

Stage 3: Built for scale

Your growth is hitting infrastructure limits. Features take forever to ship and your team spends more time keeping the lights on than building new things.

This is when you rebuild. Not because it’s broken. Because the opportunity cost of NOT rebuilding is bigger than the cost of doing it.

We hit this in March 2024. The rebuild took four months and a complete rewrite: new database architecture, new code, everything from scratch. It sucked, but the alternative was worse.

What holding onto your MVP too long costs you

Let’s get specific. Because “technical debt” sounds abstract until you calculate what it’s costing you every week.

You can’t deliver anymore

When your infrastructure can’t handle the load, you can’t do what customers pay you for.

For Ken, this meant we physically couldn’t onboard more clients. We had demand but our system wouldn’t let us take it.

My team was running shell scripts manually every morning. We were duplicating programs across browser tabs.

We were adding workarounds on top of workarounds just to keep campaigns running. That’s not a business. That’s a nightmare.

Development grinds to a halt

Every new feature takes three times longer because you’re fighting broken architecture. Your team spends most of their time maintaining the old system instead of building new stuff.

For us, this was brutal. We couldn’t add features clients wanted because every change broke something else. Simple updates took hours, and we were moving backwards.

You lose your lead

Ken was nine months ahead of our competition in March. By September, when we finished rebuilding, that lead had shrunk to six months. We lost ground while standing still.

Our competitors kept shipping features while we were stuck rebuilding the foundation.

It almost breaks your team

Managing two systems at once nearly killed us. You’re maintaining the old broken thing while building the new thing. You’re doing double the work with divided focus.

Here’s what it looked like for us: May to September 2024. Zero new features. Zero improvements. Four straight months of just rebuilding what we already had. But we stayed ahead. Barely.

When to rebuild

Timing is everything here. Too early and you waste time you don't have. Too late and you're screwed. Here's how to know when it's time.

The red line: you can’t deliver consistently

This is non-negotiable. If your infrastructure is actively preventing you from fulfilling your core promise to customers, you’re out of time.

For us, this was when our database couldn’t process leads anymore, campaigns wouldn’t run and data wouldn’t update.

We couldn’t do the thing clients paid us for. That’s your signal.

The yellow line: development slows way down

Every feature takes forever now. Your team can’t ship fast anymore. Technical debt is compounding daily.

Do this calculation: how much slower is development now versus six months ago? If it’s 2-3x slower, you’re approaching the red line.
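To put a number on it, here’s a minimal, hypothetical Python sketch of that check. It assumes you measure velocity as the average number of days it takes to ship a comparable feature. That metric and the function names are assumptions for illustration, and the 2x cutoff simply encodes the rule of thumb above.

```python
def slowdown_ratio(days_per_feature_then: float, days_per_feature_now: float) -> float:
    """How many times slower a comparable feature ships now than six months ago."""
    # Hypothetical metric: average days to ship a similar-sized feature.
    if days_per_feature_then <= 0:
        raise ValueError("baseline must be a positive number of days")
    return days_per_feature_now / days_per_feature_then


def velocity_signal(ratio: float) -> str:
    """Map a slowdown ratio onto the warning levels described above."""
    if ratio >= 2.0:
        # 2-3x slower (or worse): you're approaching the red line.
        return "approaching red line"
    if ratio > 1.0:
        return "slower, keep watching"
    return "fine"


# Example: features took 3 days six months ago and take 7 days now (about 2.3x slower).
print(velocity_signal(slowdown_ratio(3, 7)))  # prints: approaching red line
```

The exact metric matters less than measuring it the same way both times, so the ratio compares like with like.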

You can afford the transition

Rebuilding only works if you can maintain the old system while building the new one. That needs either team capacity, runway, or both.

We could do this because we’d hired developers in March and April. We had the people to split focus. If we hadn’t, we would’ve waited.

Where’s your competition?

How far behind are they? How long until they catch up to your current level?

Our calculation: competition was 9 months behind. Rebuild would take 4 months. Math worked. If they were 3 months behind, it would’ve been a different decision.
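Here is the same math as a tiny Python sketch. It makes a deliberately pessimistic assumption that wasn’t measured: every month you spend rebuilding (and not shipping) costs you a full month of lead. The function names and the optional safety buffer are hypothetical.

```python
def lead_left_after_rebuild(lead_months: float, rebuild_months: float) -> float:
    """Months of lead remaining once the rebuild ships.

    Pessimistic assumption: you ship nothing new during the rebuild, and
    competitors close one month of the gap for every month you spend on it.
    """
    return lead_months - rebuild_months


def rebuild_makes_sense(lead_months: float, rebuild_months: float, buffer_months: float = 0.0) -> bool:
    """True if you still come out ahead, with more than `buffer_months` to spare."""
    return lead_left_after_rebuild(lead_months, rebuild_months) > buffer_months


print(lead_left_after_rebuild(9, 4), rebuild_makes_sense(9, 4))  # 5 True
print(lead_left_after_rebuild(3, 4), rebuild_makes_sense(3, 4))  # -1 False
```

The real lead ended at six months, not five, so this worst-case estimate errs on the safe side.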

What a rebuild actually looks like

Let me get practical here. Because "rebuild your infrastructure" sounds simple until you're in it.

Phase 1: Get someone who knows what they're doing

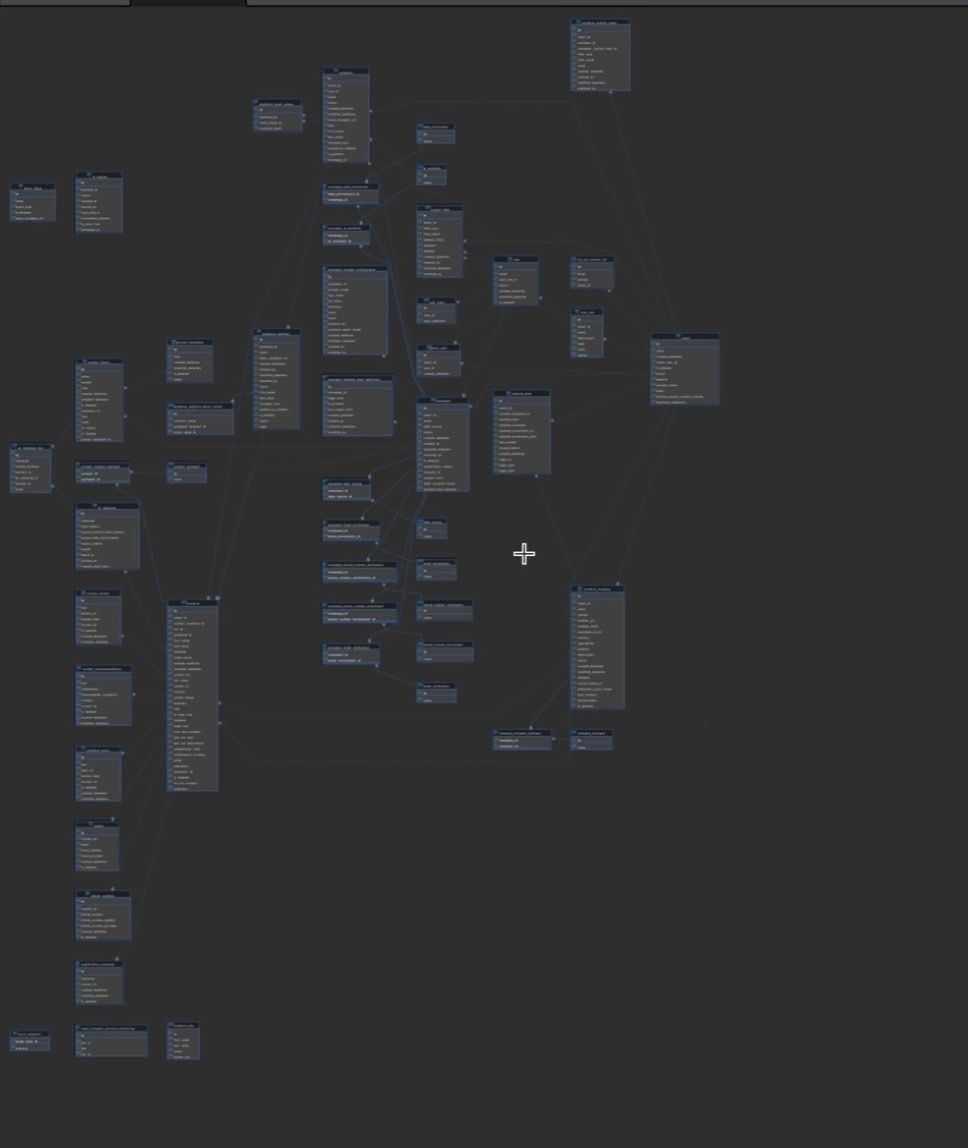

For Ken, this meant finding the best database engineer I could reach through my network. Because I’m not a database guy. I’m a product person who can code enough to be dangerous.

You’re not designing for current scale. You’re designing for 10x current scale. Because by the time you finish building, you’ll be bigger than you are now.

We went from processing thousands of leads to needing to handle hundreds of millions of records. The new system has 460 data points. It’s built to double every two months.

Phase 2: Build while the house is still standing

This is where it gets brutal. You can't turn off the old system. You're building the new one while keeping the old one running.

Your team splits: some people keep the lights on, others build the future. Nobody can fully focus on either.

We spent four months here (May to September). Most stressful period we've had.

Phase 3: Move everything without breaking everything

Migrating data from old system to new system. Then testing. Then testing again. Then finding the bugs you missed.

This takes longer than you think. Double your estimate and add two weeks. You'll still be wrong.

Phase 4: Launch and put out fires

The new system goes live. Things break that you didn't expect. You fix them fast. First month is basically triage. But if you designed it right, things stabilize.

What you should do right now

Here's where you probably are and what to do.

Pre-launch or under 100 users: Don't overthink this. Use whatever gets you to market fastest. I'm serious. CSV files worked for us.

Growing but infrastructure feels fine: Start tracking delivery consistency and development velocity. When either drops 30%, start planning.
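Here’s a minimal Python sketch of that tracking. The two metric names (on-time delivery rate and features shipped per month) are hypothetical stand-ins for whatever "delivery consistency" and "development velocity" mean in your business. The 30% threshold comes from the rule above.

```python
def drop_from_baseline(baseline: float, current: float) -> float:
    """Fractional drop versus the baseline (0.30 means 30% lower)."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - current) / baseline


def metrics_to_act_on(metrics: dict[str, tuple[float, float]], threshold: float = 0.30) -> list[str]:
    """Names of metrics that fell by at least `threshold` from their baseline.

    `metrics` maps a metric name to (baseline, current), where higher is better.
    """
    return [
        name
        for name, (baseline, current) in metrics.items()
        if drop_from_baseline(baseline, current) >= threshold
    ]


# Hypothetical numbers: delivery is only slightly worse, velocity fell 40%.
tracked = {
    "on_time_delivery_rate": (0.98, 0.90),
    "features_shipped_per_month": (10, 6),
}
print(metrics_to_act_on(tracked))  # ['features_shipped_per_month']
```

Either metric crossing the threshold is enough to start planning. You don’t need both to drop.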

Hitting infrastructure limits: Calculate your competition timeline. How long until they catch up? Can you rebuild before that?

Infrastructure is actively broken: You’re past the decision point. Start hiring. Begin planning. You’re out of time.

What this comes down to

The MVP that gets you started isn’t the infrastructure that scales. That’s not a problem. That’s just how startups work.

Your job is knowing when to transition. Not so early that you’re optimizing for problems you don’t have yet. Not so late that you’re stuck rebuilding under pressure. Somewhere in between, right before it starts blocking your growth.

We almost waited too long and it cost us four months and some of our competitive lead. But we caught it before it killed us.

I timed it right by maybe two weeks. Any later and we would’ve lost clients. Any earlier and we would’ve wasted months building for scale we didn’t need yet.

You’re setting a timer whether you know it or not. And when it goes off, you better be ready to rebuild everything or watch it all fall apart.

What are you dealing with right now? MVP decisions, infrastructure problems, technical debt you know you need to fix but keep putting off?

Hit reply and tell me. I’m collecting these for the next issue and I’ll dig into whatever comes up most.

Cristian - Founder @Ken